Google AI Researcher’s Departure Ignites New Conflict Over Ethics

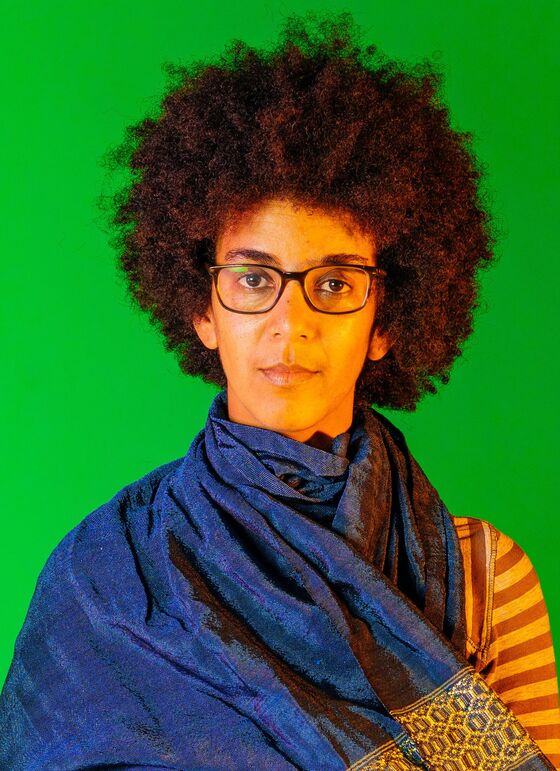

(Bloomberg Businessweek) -- Google has gotten itself into another management crisis. On Dec. 2, Timnit Gebru, an artificial intelligence researcher best known for showing how facial recognition algorithms are better at identifying White people than Black people, said she’d been fired. Gebru’s boss described her departure as a resignation, but both sides acknowledged the conflict centered on Google’s discomfort with a research paper Gebru planned to publish about ethical issues related to technology that underpins some of the company’s key products.

To date, over 2,300 Googlers and 3,700 others have signed onto a petition supporting Gebru. Google Chief Executive Officer Sundar Pichai sent an email on Dec. 9 apologizing to employees for how the company had handled her departure and promising to review the situation, saying it had “seeded doubts and led some in our community to question their place at Google.”

Pichai didn’t seem to have just ethics researchers in mind. Gebru, a Black woman, has been an outspoken advocate for other non-White employees. The two issues—the lack of diversity within the tech industry, and the way advanced software products can harm underrepresented demographic groups—have become increasingly intertwined. Many of the researchers and employees raising concerns are members of marginalized groups that don’t have much power at the company, according to Gebru. “Nobody should trust that they are self-policing their products,” she says. A Google spokesperson declined to comment.

As corporations such as Google push forward with artificial intelligence, there is increasing agreement that a line of ethical research is needed to weigh the advantages of the technology against potential harms. There’s been a steady stream of incidents where algorithms automatically insert male pronouns when talking about doctors and female pronouns when talking about teachers, or show only photos of Black people in response to web searches for “unprofessional hair.”

While academic institutions have produced some of this research, it is also coming from within research departments at the companies producing the products. Google employs over 200 people working primarily on research into ethical questions, spread among various teams; Microsoft and Facebook have similar operations. There are key advantages to this arrangement, but Google’s attempt to keep Gebru from publishing a paper that could have reflected poorly on its commercial operations highlights its inherent tensions.

José Hernández-Orallo, a professor at the Valencian Research Institute for AI, based in Spain, says it’s likely a lot of internal research doesn’t get pursued or published because of conflicts of interest or a desire to protect trade secrets. He compares letting tech companies assess their own products to allowing students to grade their own homework. “Having independent organizations or academia doing this would be desirable,” he says.

Gebru’s most famous work is an example of how research can push companies to change their AI practices, even if some are initially unwilling. While serving as a postdoctoral researcher at Microsoft in 2018, she co-authored a paper with MIT researcher Joy Buolamwini that showed how the company’s facial recognition program misidentified the gender of dark-skinned women 21% of the time, while working perfectly on White men. It showed similar flaws in programs from such companies as IBM. The paper prompted the companies to fix their products.

Google has established ethical research as a permanent aspect of its operations, treating the risk of biases creeping into its products in a similar way to cybersecurity threats. “Fairness is not something that’s one-and-done,” said X Eyee, who’s in charge of a team at Google dedicated to rooting out algorithmic bias, in an interview that took place before Gebru’s firing. “It has to be continuously prioritized, because as society changes, so will those fairness needs.”

Eyee declined to speak about Gebru’s firing, according to a company spokesperson. But the incident has clearly strained an already frayed relationship between Google management and restive factions of its workforce. Jeff Dean, Google’s head of AI, addressed the Black Google Network, a group of employees, soon after Gebru’s departure, but didn’t take questions, leaving some employees feeling further alienated.

“The right thing is to acknowledge that what happened to Timnit wasn’t right,” wrote one employee in a question-and-answer session, adding that the executives at the event had failed to do so. “Without this it is hard for me as a Black Woman to trust Google.”

Dean later canceled what was supposed to be an all-hands meeting with Google Research scheduled for Dec. 15. A group of employees from Google’s Ethical AI team then sent a letter to the company’s leadership, including Pichai. They called for a company vice president, Megan Kacholia, to no longer be part of their reporting chain, and asked Kacholia and Dean to apologize directly to Gebru. They also asked that Gebru be offered a chance to return at a more senior position.

Asked what she plans to do next, Gebru says, “I really want to be in an environment where I don’t have to constantly be drained by fighting and just proving my humanity.”

A seemingly logical solution would be to operate outside of the industry altogether. But there are drawbacks to that model of auditing AI systems, too. Academic institutions don’t have the same resources as corporate research labs and may not have access to all the data they need. Also, the closer a researcher is to the people actually building the products, the more likely she is to actually influence their shape, says Gebru. “It’s important for people to be in these corporations, along with the people who are thinking about the next product,” she says. “But the researchers need to have some amount of power. They shouldn’t just be there to rubber stamp, or as a fig leaf.”

©2020 Bloomberg L.P.